Spliced feed for Security Bloggers Network |

| ohrwurm - RTP Fuzzing Tool (SIP Phones) [Darknet - The Darkside] Posted: 23 Sep 2008 04:26 AM CDT ohrwurm is a small and simple RTP fuzzer. It has been tested on a small number of SIP phones, and none of them withstood the fuzzing. Features: reads SIP messages to get the RTP port numbers; reading SIP can be omitted by providing the RTP port numbers, so that any RTP traffic can be fuzzed; RTCP traffic [...] Read the full post at darknet.org.uk |
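The "reads SIP messages to get the RTP port numbers" step can be pictured with a small sketch. This is not ohrwurm's code; it only assumes that the SIP message carries an SDP body whose `m=audio <port> RTP/AVP ...` line advertises the RTP port. The sample message, addresses, and the function name `rtp_audio_ports` are made up for illustration.

```python
import re

# Hypothetical SIP INVITE with an SDP body; addresses and ports are illustrative only.
SAMPLE_SIP = (
    "INVITE sip:bob@example.com SIP/2.0\r\n"
    "Content-Type: application/sdp\r\n"
    "\r\n"
    "v=0\r\n"
    "o=alice 2890844526 2890844526 IN IP4 192.0.2.10\r\n"
    "s=-\r\n"
    "c=IN IP4 192.0.2.10\r\n"
    "t=0 0\r\n"
    "m=audio 49170 RTP/AVP 0\r\n"
)

# SDP media line layout: m=<media> <port> <proto> <format list>
MEDIA_LINE = re.compile(r"^m=audio (\d+) RTP/S?AVP", re.MULTILINE)


def rtp_audio_ports(sip_message: str) -> list[int]:
    """Return the RTP ports advertised in the SDP audio media lines."""
    return [int(port) for port in MEDIA_LINE.findall(sip_message)]


print(rtp_audio_ports(SAMPLE_SIP))
```

A fuzzer that already knows the ports can skip this step entirely, which matches the feature list's note that reading SIP can be omitted.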

| Lies and Unbiased Product Testing [Episteme: Belief. Knowledge. Wisdom] Posted: 22 Sep 2008 07:43 PM CDT The third party product testing space performs an important mission within the industry - they keep the vendors honest. That mission is why I was so fired up when I took a job at Neohapsis in 2007 running their product testing lab. And, while I left Neohapsis a few months ago, I’m still fired up about product testing and the important role that a truly independent third party can bring to this industry. The flip-side of that emotional intensity is that I get rather upset whenever I hear rumors about someone abusing their stance as an unbiased third party. And I recently heard something that made me ill. I had a source approach me about a product testing firm who he suggested I blog about. This “independent testing firm” apparently does the most blatantly unethical thing I’ve ever heard in that industry: They write the results that their testing will discover IN THE CONTRACT with the vendor who is requesting the testing. It’s one thing to have a “wink-wink, nudge-nudge” sort of relationship with a vendor. It’s another to tilt the test criteria slightly, or even to accept the vendor’s claims as solid assumptions during your testing. Both of those annoy me, but they’re the kinds of things that go on in most organizations (though they did not happen under my watch at Neo… I’m too much of an idealist and a pain in the ass). But when you have the outcome written in to the contract? In my mind, that’s fraud if you’re going around pretending to be an unbiased third party. I couldn’t believe that anyone would truly stoop to this level. And, since my source had left the company where he had worked with the testing lab, he couldn’t get me a copy of the contract. So, before I went on-record and wrote this one out, I wanted a bit more proof. I asked my amazing team of Indian VAs to check it out for me and provide me confirmation - not the information, just confirmation. 
Just get one of the contracts and tell me if the results are in there. Search, investigate, and interview customers of the lab to attempt to prove it one way or another. The response to that investigation? A cease and desist letter to my VAs from the test lab. (Which is why I’m not posting the lab’s name… if I’m too busy to blog, I’m way too busy to bother with lawyers). So, I’ll say it this way - if you’re reading a report about a product from a third-party lab that claims to be unbiased, take it with a grain of salt. Especially if the report came to you from the product vendor’s sales/marketing team. Or you can just ask to see the vendor’s contract with the testing lab, and see if you get handed a cease and desist letter. |

| US government rolling out largest DNSSEC deployment [Voice of VOIPSA] Posted: 22 Sep 2008 03:40 PM CDT It’s not “VoIP security”-related, but this piece in NetworkWorld today is worth a read: “Feds tighten security on .gov”. Here’s the intro: When you file your taxes online, you want to be sure that the Web site you visit — www.irs.gov — is operated by the Internal Revenue Service and not a scam artist. By the end of next year, you can be confident that every U.S. government Web page is being served up by the appropriate agency. The article goes on at some length into what the US government is doing, the issues involved and why it all matters. From a larger “Internet infrastructure” point of view, actions such as securing the DNS infrastructure will only help in securing services such as VoIP. There’s still a long way to go to getting DNSSEC widely available, but I applaud the US government for helping push efforts along. FYI, the article references the obsolete RFC 2065 for DNSSEC. For those wishing to read the standard itself, DNSSEC is now defined in RFCs 4033, 4034 and 4035 with a bit of an update in RFC 4470. |

| VOIPSA blog upgraded to WordPress 2.6.2… [Voice of VOIPSA] Posted: 22 Sep 2008 03:30 PM CDT We’ve upgraded this site to WordPress 2.6.2… there shouldn’t be any issues with the site, but please let us know if any pages act strange. Thanks, Dan |

| The night the lights went out on Broadway [StillSecure, After All These Years] Posted: 22 Sep 2008 02:22 PM CDT I could not let the occasion pass without comment. Last night one of the longest running and most successful "hits" in sports, the NY Yankees, played their last game in old Yankee Stadium. I for one always felt that the baseball spirits lived at the "house that Ruth built". The magic could pop out at any time, but come October and playoff time, was there any better place to watch a baseball game (OK, maybe it was cold in October)? Last night's ceremonies before and during the game reminded me of just how many great players have played on that field and how many of baseball's greatest moments happened there. Forget for a moment the most recent Yankee dynasty and the Red Sox rivalry, and think of Don Larsen's perfect game, Mickey Mantle, Roger Maris, Yogi Berra and Whitey Ford. From Babe Ruth, Lou Gehrig and Joe DiMaggio, all the way down to Thurman Munson, Reggie Jackson and Derek Jeter - the Yankees have a tradition second to none. But so much of that is tied up in the stadium. Babe Ruth hit the first home run there and the Yankees won that game. Jose Molina hit the last home run there last night and the Yankees won that game too. Let's hope that tradition of winning is transferred to the shiny new Yankee Stadium they built across the street! |

| Interesting Information Security Bits for 09/22/2008 [Infosec Ramblings] Posted: 22 Sep 2008 01:59 PM CDT Good afternoon everybody! I hope your day is going well. Here are today’s Interesting Information Security Bits from around the web.

That’s it for today. Have fun! Kevin Posted in Interesting Bits |

| OPC UA: Part 4 - SDK Vulnerabilities [Digital Bond] Posted: 22 Sep 2008 01:22 PM CDT In the OPC UA SDK assessment, Digital Bond analyzed the OPC UA source code and binaries from the SDK. It should be noted that the source code will be unavailable to most OPC Foundation members. As mentioned in Part 1, the overall code quality was quite good, but there were a small number of important findings including heap and stack overflows. Some of these overflows were in orphaned code that would not have affected an OPC UA application but should nevertheless be removed. Details on all of the identified vulnerabilities in the code have been provided to the OPC Foundation, and we anticipate they will be fixed in the next revision of code. Perhaps the most serious finding came from black box testing using the Server Test Application provided with the SDK. In this case, the team used the provided Server Test Application as a 'fuzzer' and caused the OPC UA server to crash, typically after three to ten connections. It is common for attackers to use and enhance test tools as attack tools, so this must be considered a likely attack. We also analyzed, to the degree possible, the security component of the development teams’ software development lifecycle. Recommendations for improvement included automated source code testing, using compiler security options (such as memory randomization at load time, stack canaries and C# microcode obfuscation) and implementing security checklists. WARNING 1. OPC UA client and server applications will have significant vendor specific application code, some of which will call this SDK. Some vendors will have a secure development process with minimal, or at least minimal easily exploitable, vulnerabilities. If history is a guide, most will not have significant security in the development process and will introduce vulnerabilities. This is similar to what we saw from Mora’s and Langner’s 2007 S4 papers on OPC vulnerabilities. 
Same OPC protocol implementations, even interoperable, but very different quality of implementations from a security perspective. 2. More extensive black box testing could and should be done when more representative OPC UA client and server applications are available. See the rest of the OPC UA blog series:

|

| tcp/32709 solved? [Kees Leune] Posted: 22 Sep 2008 09:52 AM CDT About a week ago, I put up a quick post asking if anyone knew what kind of traffic is directed at tcp/32709. Since there did not seem to be much known about this port, I did some snooping around myself. I fired up a simple netcat port listener on port 32709 and waited for incoming connections: $ nc -vvlp 32709 |tee 32709.log While mostly binary, there are some clues in here. Specifically [CHN] and [VeryCD]. Given the fact that all the scans that I detected originated from Chinese IP ranges, I suspect that [CHN] stands for "Chinese". Not sure if it is Chinese encoding, Chinese language, or something else. The second hint is [VeryCD]. A quick Google for VeryCD turns up that this is an "eMule based Chinese P2P media directory". Some more Googling reveals that VeryCD clients are insanely aggressive in probing for new file-sharing servers. I suspect that this is exactly the behavior that hit my machine. For now, I'll keep the following networks blocked; it took away just about any and all portscanning that was hitting me (that is; that I detected ;) 59.62.0.0/15 And yes, that does represent an awful lot of hosts that can no longer read my blog. I'll live with that; there are plenty of legitimate sources as well as illegitimate sources that carry my content. For the time being: case closed. |
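To make the two checks in the post concrete, here is a minimal sketch using only the Python standard library. It assumes the captured payload is available as bytes (for example, the contents of `32709.log`) and uses the single network range that appears in the post; the function names `find_markers` and `is_blocked` are illustrative, not anything the author used.

```python
import ipaddress

# The one range that appears in the post; the author's full block list is not shown.
BLOCKED_NETWORKS = [ipaddress.ip_network("59.62.0.0/15")]

# Marker strings the author spotted in the mostly binary capture.
MARKERS = [b"[CHN]", b"[VeryCD]"]


def find_markers(payload: bytes) -> list[str]:
    """Return the known marker strings that occur in a captured payload."""
    return [marker.decode("ascii") for marker in MARKERS if marker in payload]


def is_blocked(address: str) -> bool:
    """Return True if the source address falls inside a blocked network."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in BLOCKED_NETWORKS)


# A /15 spans two adjacent /16 networks: 59.62.0.0 through 59.63.255.255.
print(BLOCKED_NETWORKS[0].num_addresses)
print(is_blocked("59.63.10.20"), is_blocked("59.64.0.1"))
```

The address count printed by the first line gives a sense of how many hosts a single /15 block shuts out.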

| Mark Collier and SecureLogix release new VoIP security tools [Voice of VOIPSA] Posted: 22 Sep 2008 09:30 AM CDT In a message to the VOIPSEC mailing list over the weekend, Mark Collier announced the release of a new suite of VoIP security test tools. Mark, as you may recall, is the co-author with (VOIPSA Chair) David Endler of the book “Hacking Exposed: VoIP” and as part of the book publication he and Dave made available a series of voip security tools through their hackingvoip.com website. Now, Mark’s back with a second version of those VoIP security tools. He describes the new tools in one blog post on his VoIP security blog and announces their availability in a second blog post. Here’s his description of new tools:

Interestingly, the tools are not being made available through Hackingvoip.com but rather directly from SecureLogix’s web site, where you have to register first to download the tools. Mark also provides a PowerPoint presentation about the “Call Monitor” tool he mentions here. He’d mentioned this tool to me once before when we met at one of the conferences…. basically it provides a “point-and-click” interface to allow you to inject or mix in new audio into existing audio streams. Making it this easy is definitely a scary prospect (and another good argument for why you should be using SRTP to encrypt audio streams). Anyway, the new tools are now out there if you want to try them out. (Joining the long list of existing VoIP security tools.) |

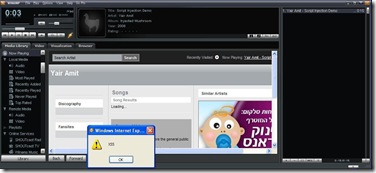

| Winamp NowPlaying Unspecified Vulnerability: The Details [IBM Rational Application Security Insider] Posted: 22 Sep 2008 09:23 AM CDT Hey, Since no information has yet been published about a vulnerability I recently discovered in Winamp, and the issue has raised some interest, here are the details. Recently, while listening to some music via Winamp (my favorite media player), I recalled an old Winamp Buffer-Overflow vulnerability found by Leon Juranic in 2005. Knowing that Winamp uses an embedded browser in various places, I decided to take a closer look at it and see what could be done... ;) Winamp's "Now Playing" feature uses an embedded browser to present information about the currently played media file. When the user plays a media file, some of the file's metadata is embedded into the HTML that the embedded-browser displays. It turned out that if the metadata (id3 tags) of the mp3 file contained a JavaScript payload, Winamp failed to sanitize it and therefore injected it intact into the embedded browser – a feasible XSS exploitation scenario.

Furthermore, due to the integration between the embedded browser and the Winamp application, this script injection vulnerability has some unique characteristics. In many cases, desktop applications that utilize IE embedded browsers render the HTML content in a highly privileged zone called "My Computer Zone". This zone allows the programmer to perform a wide range of actions on the computer and thereby to "interact" with the hosting application. The downside of this (fairly common) approach is that if the application is susceptible to XSS, a malicious attacker might be able to exploit it and gain full control over the victim's system. (Aviv Raff did some great work in this field, "Skype cross-zone scripting vulnerability" being a well-known example.) Winamp's programmers were probably aware of this security threat and therefore chose a different approach. Instead of creating and loading a local file that contains all the relevant data (e.g. data about the song retrieved from the Internet and data originating from the id3 tags of the mp3 file) in a privileged security zone, Winamp loads the embedded browser with a page located at http://client.winamp.com (Internet URL - non-privileged zone). At first glance, this seems to make an XSS attack innocuous, because of the Same Origin policy. However, in order to implement the required interaction between the Winamp application and its embedded browser, a bridge from the browser to Winamp was created in the form of window.external. That means that JavaScript code (which is attacker-controlled due to the XSS vulnerability I found) could trigger various internal functionalities of Winamp which were not intended to be invoked by a remote attacker. The attack could then be taken one step further, by trying to identify functions that are susceptible to memory-based attacks or logical attacks (reading/writing of information from/to the victim's host and even gaining full system control in the form of executing commands). 
Since the attack is almost undetectable (the malicious JavaScript within the id3 tags can be padded with white spaces and therefore rendered invisible to the Winamp user when the malicious mp3 file is played), such a poisoned file could be easily distributed via P2P networks, resulting in a large-scale (and possibly silent) attack against Winamp users.

Acknowledgments: I would like to thank Winamp's team for their quick responses and the efficient way in which they handled this security issue. |
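The root cause described above is metadata being placed into HTML without sanitization. As a rough illustration of the general fix (escaping untrusted text before it reaches the markup), here is a minimal sketch. It is not Winamp's code: the template, the function name `render_now_playing`, and the sample tag values are all hypothetical.

```python
import html

# Hypothetical template; the real "Now Playing" page markup is not shown in the post.
TEMPLATE = '<div class="now-playing">{title} - {artist}</div>'


def render_now_playing(title: str, artist: str) -> str:
    """Embed id3-style metadata into HTML, escaping it first.

    html.escape turns <, >, & and quotes into entities, so tag content
    is displayed as text instead of being interpreted as markup.
    """
    return TEMPLATE.format(title=html.escape(title), artist=html.escape(artist))


# A title carrying a script payload, as in the scenario the post describes.
print(render_now_playing("<script>alert(1)</script>", "Some Artist"))
```

Escaping on output only covers values placed in HTML text or quoted attributes; metadata dropped into a script block or a URL would need context-specific encoding.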

| McAfee takes Secure Computing out [StillSecure, After All These Years] Posted: 22 Sep 2008 08:09 AM CDT McAfee wants to make sure it remains the biggest "pure play" security vendor. One of the larger public pure plays in security, Secure Computing is the latest competitor that McAfee is taking off the board. They have agreed to buy Secure for $5.75 a share. This is quite a premium over the $3.50 to $4.50 range that Secure had been trading in prior to the deal. For me though the Secure stock price was not indicative of Secure's true value. I think it was much less. I think Secure Computing never really recovered from its acquisition of CyberGuard. It never really hit its stride or made the expected progress after that deal. Yes, it continued to acquire businesses, but mostly these were bottom-feeding deals that didn't add a lot of quality to the mix. Secure's most recent acquisition of Securify was a perfect example. They bought a stagnant growth company doing about 12 million for 15 million, but what to do with it? It seemed that they were just looking to pile up revenue with no real thought to how these all fit together. In any event, now it is McAfee's problem. I like Secure's firewall product line and I think that is something McAfee does not have. As for the rest of the portfolio, it remains to be seen what if any value McAfee will find in it. In the meantime, security consolidation marches on. |

| Modern Exploits - Do You Still Need To Learn Assembly Language (ASM) [Darknet - The Darkside] Posted: 22 Sep 2008 06:33 AM CDT This is a fairly interesting subject, I think, as a lot of people entering the security field still ask me whether they need to learn Assembly Language. For those that aren’t sure what it is, it’s pretty much the lowest-level programming language computers understand without resorting to simply 1’s and [...] Read the full post at darknet.org.uk |

| Security News Links for 9/14 [Nicholson Security] Posted: 21 Sep 2008 07:00 AM CDT What impact will the financial industry meltdown have on the security industry? VMWorld 2008: “Introducing Cisco’s Virtual Switch for VMware ESX”… VMWorld 2008: Forecast For VMware? Cloudy…Weep For Security? Virtually excited about virtual IPS SQL injection taints BusinessWeek.com (Cancelled) / Clickjacking - OWASP AppSec Talk "You can't get the toothpaste back in the tube, so deal." The Fallacy of Complete and [...] |

| OPC UA Part 3 Follow Up [Digital Bond] Posted: 20 Sep 2008 10:33 AM CDT As discussed in Part 3, mandating that an OPC UA server validate X.509 certificates prior to using them to create secure channels is essential. It is the foundation that all OPC UA security measures are built upon. Of course whenever you mention certificates and public key infrastructure [PKI] it makes people nervous. Understandably because PKI can be quite complex - - but it doesn’t have to be. A very simple and secure approach would be:

Done. So what is the problem with this? Well, any new OPC UA client would not be able to connect to an OPC UA server immediately. Is that a bad thing? Another approach would be to:

Of course this requires the OPC UA server vendor add some features to the application, and it still requires the asset owner/operator to take action prior to using a new OPC UA client. The CA is more interesting as an option if an asset owner has multiple OPC UA servers. |

| Interesting Information Security Bits for 09/19/2008 [Infosec Ramblings] Posted: 19 Sep 2008 05:29 PM CDT Good afternoon everybody! I hope your day is going well.

That’s it for today. Have fun!  |

| Friday News and Notes [Digital Bond] Posted: 19 Sep 2008 04:20 PM CDT

|

| I’m not an economist, but… [Episteme: Belief. Knowledge. Wisdom] Posted: 19 Sep 2008 01:22 PM CDT I just read the info on the new US mortgage bailout. I’m bothered. I can’t figure out how this works. I mean, I get the idea - the federal government purchases (and later attempts to sell) “hundreds of billions of dollars” of bad paper. But, if the paper is no good, it means there’s no resale value. So, that hundreds of billions of dollars gets piled on top of the federal debt. It seems to me that, in the medium term, that extra debt exerts further downward pressure on the US dollar against other international currencies. (What’s interesting is that, up to now, most of the US borrowing has been for international and discretionary purposes like war and trade - now we’re borrowing large-scale for domestic purposes. It seems to me that it’s like the difference between borrowing on a credit card to eat at restaurants and borrowing to buy groceries…) As the dollar declines further, the US has a harder and harder time remaining solvent and inflation increases. At that point, more bad paper will emerge (i.e. more mortgage defaults as gas hits $10/gal and a loaf of bread costs $5), making this all get a whole lot worse. This doesn’t seem to be the right way out. USA Today made a fantastic point today - the USA is not following its own counsel. From the article: Throughout more than a decade of recurrent crises in nations such as Mexico, Russia and Thailand, the United States offered the same advice: Let the market solve the problem and get the government out of the way…… …. In the 1990s, officials of the U.S. Treasury and the U.S.-backed International Monetary Fund urged the leaders of crisis-hit countries to embrace market-oriented policies designed to put their economies on sounder, long-term footing. But the recommendations — to slash government spending and privatize bloated state companies — meant genuine pain for millions and thus real political costs for leaders. 
It seems to me that we’re taking massive short term action to avoid the long term consequences of our actions. It’s like someone who is writing bad checks: you write one, then you write another to cover that one (plus a little more), then another, and another, until, eventually, you can’t write a $1M check to cover everything you’ve done. Unfortunately, as anyone who has piled lie on top of lie to avoid getting caught knows, if you come clean and pay the piper early, the pain isn’t so bad. It’s only by putting it off over and over again that we create a situation that ends up as a disaster. It seems to me that this is just another way of putting off the inevitable. Anyone who has read the story of Japan’s collapse in the 90s knows what eventually happens - you eventually can’t cut the interest rate any further, and can’t borrow any more. At that point, everything comes back in to line with a snap. And putting it off another six months only makes it hurt that much worse. |

| An Information Security Place Podcast - Episode 5 [An Information Security Place] Posted: 19 Sep 2008 12:06 PM CDT OK folks. Here’s the long-awaited episode 5 of the podcast. Sorry for the delay in getting this one out. Hurricane Ike put a big damper on our plans since I was without electricity for a few days. Internet has been spotty as well, but it held up for Jim and me to record last night. Show notes:

Music:

Vet |

| OPC UA: Part 3 - Specification Vulnerabilities [Digital Bond] Posted: 19 Sep 2008 12:05 PM CDT OPC UA is a complex, interleaved 12-part specification. To understand OPC UA security one has to read multiple parts of the specification, but we have provided an overview in an OPC UA SCADApedia page that continues to be developed. The specification analysis portion of our assessment report had many findings at the Exposure, Concern and Observation level. This is not unusual given the complexity of the specification, and the effort required to maintain consistency across many different parts of the specification. Also, we want to reiterate that the OPC Foundation is addressing many of these findings, and we will provide the resolution or mitigation when available in Part 7 of this series. So here are the highlights: The biggest issue we found in numerous parts of the OPC UA specification was that the security features can provide a high level of security, but an OPC UA user could easily have a false sense of security even with a compliant product with security measures turned on. Even worse, the default, out-of-box configuration could be compromised even in an OPC UA server that is billed by a vendor as secure because it is encrypted and signed. X.509 Certificate Infrastructure The specification allows OPC UA client and server applications to accept self-signed and other certificates without any automated or manual process to determine if a certificate should be trusted. It is likely that many vendors, and subsequently many users, will want to avoid the difficulties of deploying a public key infrastructure (PKI) or forcing a manual process to approve new certificates as they arrive at the OPC UA client or server. The result is that an OPC UA server, even one requiring encryption and signatures, could be compromised by anyone who is able to gain access to OPC UA client software. 
The attacker would just have an encrypted and authenticated Secure Channel to pass attacks through to the server. The OPC UA specification should be modified and be very clear that all certificates must be explicitly trusted through a PKI or other process prior to use. This is true for both the OPC UA client and server receiving an unknown application instance certificate. The specification also is unclear and conflicting in places on the requirement to sign and encrypt security critical service messages, most importantly, OpenSecureChannel and CloseSecureChannel messages. It appears at one time the intention was to make security mandatory on these messages even when the Message Security Mode is set to NONE, which would provide a useful minimal level of security prior to any communication, but the specification has not accomplished this due to conflicting information in different specification parts. If Secure Channels are allowed to be created and closed without security the OPC Foundation should modify the specifications to indicate there are Secure Channels and Channels. Again this is a false sense of security issue with someone thinking they are getting security from a Secure Channel even though sign and encrypt are set to none. Other Issues There were many areas in the specification that provided the vendor with implementation choices that would have a dramatic impact on the security of the OPC UA server. We will cover these in Part 5 of the series. These are not necessarily deficiencies in the specification, but a yellow flag for potential OPC UA users that all OPC UA security is not equal. See the rest of the OPC UA blog series:

|
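The recommendation above, that every certificate be explicitly trusted before use, can be sketched as a fingerprint allowlist. This is only an illustration of the "other process" option, not anything taken from the OPC UA specification or SDK: the class name `TrustList`, its methods, and the placeholder byte strings standing in for DER-encoded certificates are all assumptions.

```python
import hashlib


class TrustList:
    """Explicit allowlist of certificate fingerprints (SHA-256 over the DER bytes)."""

    def __init__(self) -> None:
        self._fingerprints: set[str] = set()

    @staticmethod
    def fingerprint(der_bytes: bytes) -> str:
        return hashlib.sha256(der_bytes).hexdigest()

    def approve(self, der_bytes: bytes) -> str:
        """Record an operator's manual decision to trust this certificate."""
        digest = self.fingerprint(der_bytes)
        self._fingerprints.add(digest)
        return digest

    def is_trusted(self, der_bytes: bytes) -> bool:
        """Only certificates approved earlier may be used to open a channel."""
        return self.fingerprint(der_bytes) in self._fingerprints


# Placeholder bytes standing in for real DER-encoded certificates.
known_client = b"placeholder-der-bytes-for-approved-client"
unknown_client = b"placeholder-der-bytes-for-self-signed-stranger"

trust = TrustList()
trust.approve(known_client)
print(trust.is_trusted(known_client), trust.is_trusted(unknown_client))
```

The point of the design is that the default answer for an unseen certificate is "no", so a self-signed certificate from an unknown client cannot open an encrypted and authenticated channel until someone has approved it.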

| Surf Jack - Cookie Session Stealing Tool [Darknet - The Darkside] Posted: 19 Sep 2008 05:46 AM CDT A tool which allows one to hijack HTTP connections to steal cookies - even ones on HTTPS sites! Works on both Wifi (monitor mode) and Ethernet. Features: Does Wireless injection when the NIC is in monitor mode Supports Ethernet Support for WEP (when the NIC is in monitor mode) Known issues: Sometimes the victim is not redirected correctly... Read the full post at darknet.org.uk |
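One common reading of how cookies from HTTPS sites end up exposed is that they were set without the Secure attribute, so the browser will also send them over plain HTTP. Assuming that is the weakness being exploited here (the truncated post does not spell it out), a minimal sketch of setting a session cookie with the Secure attribute using Python's standard library looks like this. The cookie name and the function `session_cookie_header` are hypothetical.

```python
from http.cookies import SimpleCookie


def session_cookie_header(session_id: str) -> str:
    """Build a Set-Cookie header for a session cookie restricted to HTTPS."""
    cookie = SimpleCookie()
    cookie["session"] = session_id
    cookie["session"]["secure"] = True    # only send over HTTPS connections
    cookie["session"]["httponly"] = True  # keep it away from page scripts
    cookie["session"]["path"] = "/"
    return cookie.output()


print(session_cookie_header("abc123"))
```

With the Secure attribute present, a redirect of the victim to the plain-HTTP version of the site should not cause the browser to attach this cookie.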

| Links for 2008-09-18 [del.icio.us] [Sicurezza Informatica Made in Italy] Posted: 19 Sep 2008 12:00 AM CDT |

| The mother of all bailouts [StillSecure, After All These Years] Posted: 18 Sep 2008 08:54 PM CDT

Similar to what we did with the Resolution Trust Corporation back in the Savings and Loan crisis (Does anyone remember the Keating 5? Extra points for naming who was the number one of the Keating 5), we will set up a company that banks can sell or transfer all of their bad mortgages to. This way they get all of the bad stuff off of their books and can get back to pillaging the little guys in this country. Speaking of the little guys, where is their bailout? Where is the government to help the people being put out of their homes every day? It is OK to give trillions of dollars to Wall Street fat cats and bank presidents, but the families being tossed out of their homes get nothing. It just doesn't add up for me. If the banks and Wall Street need relief, so do the individual people who are already suffering from the greed and excess of the last years. I am waiting to see what comes next. In the meantime I am thinking of starting a bank to get my share of the bailout money. |

| NAC Interoperability - Man or Myth? [StillSecure, After All These Years] Posted: 18 Sep 2008 08:02 PM CDT

Well, here is my empty bottle about the myth of interoperability of NAC. The bottle is empty because NAC interoperability is no myth and is very much real. The problem is that most people are waiting for that golden moment when the Sun aligns, the angels sing and interoperability is proclaimed throughout the land. What does interoperability really mean? Does it mean that one NAC solution is going to work with another and that they are interchangeable? I don't think so. Interoperability means to me that all of the moving parts involved in NAC work together across different vendors. My friends, we have that now. Call off the hunt for the myth, the reality is here. What NAC interoperability means is: can your NAC controller work with switches from different vendors to test and enforce for access control? What NAC interoperability means is: can NAC systems use a soon-to-be-ubiquitous agent, such as the Microsoft NAP agent? If so, we have that today with any TCG compliant NAC. Can NAC systems use just about any DHCP or Radius server? Yes. SNMP or 802.1x? Check. Default supplicants? Yes again. Guys, the systems and tools used to install NAC, the switches, VLANs, ACLs, Radius, AD, DHCP servers, all work together today. Cisco works with NAP, TCG works with NAP, NAP works with everything else. Stop waiting for the mythical deliverer, the NAC promised land is right here before your eyes. It is not just a StillSecure thing either. Take a look at any of the leading NAC solutions with the exception of Cisco and you will see a high level of interoperability with the network infrastructure components that NAC needs to function. Cisco is another story. My personal belief is that they give lip service to wanting to be interoperable, but frankly they would rather see hell freeze over. With their dominant position in the network market, they want their stuff to work best on their own gear. They want to use that as a reason to use only their equipment and lock you into the Cisco mono-culture. 
Every other NAC vendor will work with a wide range of network switches and gear. So it is in Cisco's interests to sow myths and misconceptions. To drag their feet in working with other solutions. But other NAC solutions work just fine on Cisco gear. Make no mistake about it. NAC is interoperable right now! |

| Noscript vs SurfJacking [Hackers Center Blogs] Posted: 18 Sep 2008 06:00 PM CDT |
