Spliced feed for Security Bloggers Network |

| Wine Review: 2006 Cellarmaster's Riesling from Columbia Winery [The Falcon's View] Posted: 26 Jul 2008 10:17 PM CDT | ||

| Oh boy, jet lag! [Liquid Information] Posted: 26 Jul 2008 06:08 PM CDT No added value in this message, just venting my jet lag frustration. I have been traveling for almost two weeks in the US. Finally back at home. I fell asleep around seven in the evening and got woken up at eleven. Now I don't feel like going back to sleep anymore, even though my head is a total mess due to the jet lag. It is around 2 o'clock in the morning and I'm just staring at the screen. I wish I hadn't been woken up. It would have been much better to wake up at 3-5 in the morning and try to adjust from there than to stay up at this hour. Oh well, trying to "fix" things by drinking a few beers, even though it probably makes things worse ;) Overall, the trip was a success and I hopefully added the required value to the business reason I was in the US. It would have been cool if I could have extended the trip to BlackHat Las Vegas and DefCon, but because of normal life appointments and the length of that journey it would have been pretty crazy. The only thing I'll reveal about the trip is that I had some pizza and bagels in New York and found out that in all the "chaos" there was a certain rhythm which made total sense. I also asked for an NYC-specific drink and was given a Cosmopolitan. It was in my opinion quite good, but isn't that more like a girl drink? *shrugs* | ||

| Web Application Security Survey Results [Infosec Events] Posted: 26 Jul 2008 04:06 PM CDT A couple weeks ago, Jeremiah Grossman put together a survey for web application security professionals, and now the results are posted. There were 17 questions, ranging from your general background to rating web vulnerability scanners. There were some funny questions like the HackerSafe one… Safe from Hackers, Safe for Hackers, or Other? Jeremiah also posted his thoughts on the results on his blog. The full report is also online thanks to Robert "RSnake" Hansen. It also has all the comments from the web application security professionals survey, and they are quite interesting to read. Thanks to Jeremiah for putting together the survey, and thanks to all that participated. | ||

| Down For Maintenance... [PaulDotCom] Posted: 26 Jul 2008 12:28 PM CDT Between Larry on vacation and me moving my office around in the house and doing some general clean-up, we will have to skip this week's podcast. I was also traveling to SANSFIRE to do some teaching, which made the week a bit hectic as well. We will be back to our regular security monkey selves this coming week. However, check me out on the Typical Mac User Podcast this Sunday, where I will be discussing how to secure Mac OS X! PaulDotCom | ||

| Symposium On Usable Privacy and Security [Infosec Events] Posted: 26 Jul 2008 01:26 AM CDT The three-day Symposium On Usable Privacy and Security finished today. We were not able to attend this event, but judging from the program information, some interesting usability research was presented during the symposium. The majority of the research papers and slides are now online for your viewing. One of the most talked about presentations was from three students at the University of Michigan. Their paper was called Analyzing Websites for User-Visible Security Design Flaws. While analyzing 214 web sites, which were mostly banks, they found that 76% of them had a design flaw that would confuse users or even cause problems for security-savvy users. The CUPS blog has some good notes from this presentation, and there are some decent discussion points on Slashdot. Some other topics that I found interesting:

| ||

| Results: Web Application Security Professionals Survey (July 2008) [Jeremiah Grossman] Posted: 25 Jul 2008 01:10 PM CDT The survey concluded this morning with a simply amazing turnout! A total of 340 respondents -- well over double the previous. Thank you to everyone who helped get the word out, and of course to those who took the time to fill out the form. This information is invaluable. Since there were so many responses, and hence comments, I'm only able to post the report graphs below. The full report containing all the comments, probably the best part, is available for download (xls). The upside of so much data is that I was also able to run reports on people classifying themselves as "Security vendor / consultant", "Enterprise security professional", and "Developers" individually to see how they differed, if at all. If you want the entire package of reports, here ya go. Big hat tip to Robert "RSnake" Hansen for the bandwidth. And now for my interpretation of the results…

Question #1 – 3 Shows that we have a nicely diverse set of individuals with varying backgrounds and years of experience. It looks like I should have had more granular answer options for Q2 though, note for next time.

Question #4 In the matter of browser security I figured just about everyone is using something above and beyond a default install, which is just plain crazy nowadays, and the results confirmed it. What astonished me though is the percentage of people across the range using virtualization, roughly 25%! Think about this. 1 in 4 web security people assume their browser and/or OS has a high likelihood of getting owned. Military intelligence, congressional ethics, browser security.

Question #5 In retrospect I should have asked a better PCI-DSS related question, the answers were unsurprising. People in certain business sectors were influenced by PCI-DSS when it applied to them and they weren't when it didn't. 
What I really want to know is what ARE the driving factors behind why organizations are investing in web application security. I'll try to figure out a better way to get to that answer set.

Question #6 These answers I found to be really interesting because they were split roughly down the middle and the comments were all over the map. Clearly there is no widely accepted view of what security means in the Web 2.0 software development era. We're still trying to figure things out and convince ourselves that we have the right answer. Or that someone does. I think there is a lot still to be learned in this particular area and I plan to ask more questions on the topic going forward. This also might be an area where we should bring individual experts and practitioners together to discuss the various issues.

Question #7 I purposely kept the term "vulnerability scanner" vague to see how they performed as an entire category. It doesn't appear that vulnerability scanners, or at least people's impressions of them, have improved much since the last survey. They performed dismally in Web 2.0 technologies including Ajax, Flash, and Web services. What surprised me is how well the scanners performed in the persistent XSS category, on par with the non-persistent. I can't say I agree, but it is what it is. It could be an artifact of people not understanding the difference and figuring that if the tool didn't find it, it's not there. The other interesting thing is that developers have a better opinion of scanners than security vendors and enterprise professionals. I plan on digging into this area even more in the future, separating out scanner types and asking for product names and overall impressions.

Question #8 I was fairly impressed with these results. 1/3 of the respondents said they'd either recommend a WAF, already have a WAF, or had a WAF on the road map. Then half of everyone said they were "Skeptical, but open minded" as compared to a sparse 15% expressing a level of negativity. 
This should be a huge market indicator for WAF vendors, industry analysts, VARs, and systems integrators. That 50% category represents a huge opportunity to demonstrate a WAF's value and long-term viability. In the next year we'll know which way the trend is heading.

Question #9 C'mon, I had to poke a little fun at RSnake. I mean you gotta know web security is becoming mainstream when you can't automatically win an online Chihuahua beauty contest poll in Austin without getting out haxored at the last second. ;)

Question #10 OK, that settles it. Web security people have little to no respect for McAfee's HackerSafe brand and even that's putting it mildly if you read the comments. I was confused about what the large number of "other" respondents wanted for an option though. There is also a quite unnerving statistic with developers, as their answers were split in thirds. Could it be that 1/3 of developers believe HackerSafe means security?

Question #11 And there we have it, web security people don't trust Google, roughly 75% of them anyway. The kicker is most still use them in some way or form anyway. Maybe in many ways we are just like the average user. We'll tend to sacrifice security for convenience just like they do.

Question #12 Some like the idea, some hate it, and others have a love-hate relationship. Either way it appears there are enough people interested in a certification that it's time for someone to do it and do it well. Sooner or later there will be 1 or perhaps 2 industry acceptable certifications. Who will it be!? Probably the first one to do it right will.

Question #13 The answers were all over the map and I don't think I asked this question in the best way. Still the majority of C-level executives are at least giving the web security problem a good look. What I'd really like to know, similar to question #5, is what exactly is causing people to care and dedicate more resources. Maybe it's an incident, industry regulation, keeping up with the Joneses, who knows! 
I aim to find out.

Question #14 80% of us figured the industry noise was tolerable or we've become numb to it. The rest, well, they are not long for the industry anyway. You must get used to it or you'll go crazy, maybe some of us already have.

Question #15 Who's winning? Few think it's the good guys. What more can we say here but that this is really sad and we have our work cut out for us.

Question #16 The forced ranked list. Some were close in the center, but this is what we got. Awareness is still a HUGE issue and I tend to agree.
1) General awareness and education
2) Implementation of security inside the SDLC
3) Source code analysis
4) Black-box vulnerability assessment / pen-testing
5) Web application firewalls
6) Enforcing industry regulation

Question #17 Read the comments, it's worth it. :) | ||

| Randy Pausch died today [Episteme: Belief. Knowledge. Wisdom] Posted: 25 Jul 2008 12:40 PM CDT I’m sitting in a cafe getting client work done when I looked at my Gmail and the little news ticker across the top had the notification: Randy Pausch died today. I had heard of, but hadn’t seen Pausch’s Last Lecture video when I picked up the audiobook of the Last Lecture and found myself laughing and crying through a long bike ride one Sunday. If you haven’t seen it or heard about it, definitely check it out. His story is pretty incredible, and the message is pretty amazing too. Actually, to abridge it perfectly, there’s a quote in the Yahoo story above: “We don’t beat the reaper by living longer, we beat the reaper by living well and living fully.“ | ||

| The Daily Incite - July 25, 2008 [Security Incite Rants] Posted: 25 Jul 2008 09:48 AM CDT  July 25, 2008 - Volume 3, #64 Good Morning:

Top Security News Greene continues the NAC pile on

Top Blog Postings Remember about Plan B | ||

| The Metasploit DNS vulnerability exploit [Kees Leune] Posted: 25 Jul 2008 08:58 AM CDT Andy IT Guy posed an interesting question and added a poll to it: Should HD Moore have released a Metasploit exploit for the recent DNS vulnerability? I started my response as a comment to Andy's post, but since my comments tend to run long, I figured I'd make it a post of its own here. Many people have responded: "If you can tell, with absolute certainty, that systems are vulnerable to an exploit without needing to test the mechanism, what good is served by releasing weaponized attack code immediately after patches are released, but before most enterprises can patch?" Source: Rich Mogull "POC code for near-zero day 'sploits is like SPAM advertising penis-extending drugs...the only dick it's helping is the one writing it..." My principal feeling on this issue is that it is indeed a good thing to have the exploit available in Metasploit. However, Andy's question has two components: 1) should Metasploit have the DNS exploit (YES!), and 2) was the timing of the release correct? I already answered the first part of the question. The answer to the timing question revolves around one thing: adding an exploit to Metasploit after it has been seen in the wild is one thing, but taking the lead in developing it is not the wisest thing to do. Metasploit has developed into a platform that is so well-built and easy to operate that it has become very dangerous to put a new sort of ammunition in it. If the exploit was out in the wild already, and that fact was indeed confirmed, HD Moore's decision to release was valid. If the exploit wasn't out in the wild yet, the release was irresponsible. By releasing the code, we made the lives of the bad guys easier and that is not our job. 
I have no doubt that the exploit would have been available soon if HD Moore had not released his, but when a platform such as Metasploit reaches so many people, giving them the necessary tools to do bad things is not the most responsible form of full disclosure. Being in the public spotlight brings consequences, and sometimes that means that you have to be the responsible person. | ||

| De-ice.net pentesting live CD's [Kees Leune] Posted: 25 Jul 2008 07:46 AM CDT I have always admired the skills of people who are really good at penetration testing. Trying to gain access to a machine through not-so-obvious ways is something that requires a high degree of technical knowledge, proficiency with tools and a good dose of creativity. I have never been in a situation where I was commissioned to pentest a box myself, but I've dabbled enough with it lately. My first exposure to it came when I took the SANS 504 course on Hacker Techniques, Exploits and Incident Handling. The class ends with a capture-the-flag session that I enjoyed a lot. Since then, I've been keeping an eye open for some other challenges, and I found one at The Last HOPE. One of the speakers mentioned that www.de-ice.net hosts some bootable CD images that are used to teach people pentesting skills. The author of the CDs did a nice job and grouped them in different levels of difficulty. The de-ice CDs are designed to be breakable with the tools included on the Backtrack Live CD. After downloading the images, I was hooked. Unfortunately there are only three CDs out at the moment, but I am proud to say that I managed to win all three challenges. I also admit that I needed some help getting the last one; I was unfamiliar with one of the tools used and needed a little hint. With that last hint, I was able to solve the third and final challenge. If you're into pentesting, or if you would like to get started, I wholeheartedly recommend taking a look at www.de-ice.net. While all the hackable images are Linux-based (due to licensing), they are very informative and fun to do. Don't bang your brains too hard; they progressively get more difficult! | ||

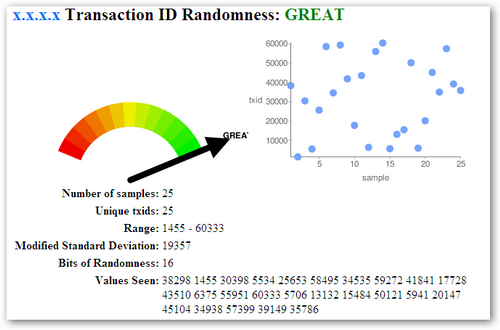

| More DNS Cache Poisoning Testing Tools [Infosec Events] Posted: 25 Jul 2008 12:20 AM CDT Now that public exploits are available for the DNS cache poisoning attack, now is a good time to patch your DNS servers if you haven’t already. Some new tools also came out to test if your DNS server is vulnerable to DNS cache poisoning. The web-based DNS randomness test by DNS-OARC is very good, and it outputs pretty graphics. For those that like the command line, you can also use dig and nslookup with the DNS-OARC test.
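
For the command-line route, the DNS-OARC check is usually run as `dig +short porttest.dns-oarc.net TXT`, which answers with a one-line verdict on how random the resolver's source ports looked. The sketch below only parses such a verdict line; it is a minimal illustration, not an official client. The exact wording of the verdict, the sample line, and the names `parse_porttest` and `looks_patched` are assumptions made for this example.

```python
import re

# Assumed shape of the verdict line returned by the DNS-OARC port test.
# The sample further down is illustrative, not captured output.
VERDICT_RE = re.compile(
    r'(?P<resolver>\S+) is (?P<rating>GREAT|GOOD|POOR): '
    r'(?P<queries>\d+) queries in (?P<seconds>\d+(?:\.\d+)?) seconds '
    r'from (?P<ports>\d+) ports with std dev (?P<stddev>\d+(?:\.\d+)?)'
)


def parse_porttest(txt_record):
    """Return the parsed verdict as a dict, or None if the line does not match."""
    match = VERDICT_RE.search(txt_record.strip().strip('"'))
    if match is None:
        return None
    result = match.groupdict()
    result["queries"] = int(result["queries"])
    result["ports"] = int(result["ports"])
    result["seconds"] = float(result["seconds"])
    result["stddev"] = float(result["stddev"])
    return result


def looks_patched(verdict):
    """Hypothetical rule of thumb: a POOR rating deserves a closer look."""
    return verdict is not None and verdict["rating"] in ("GOOD", "GREAT")


# A resolver that sent every query from the same source port.
sample = '"192.0.2.53 is POOR: 26 queries in 4.4 seconds from 1 ports with std dev 0"'
verdict = parse_porttest(sample)
print(verdict["rating"], verdict["ports"], looks_patched(verdict))
```

A resolver that reuses one source port, as in the sample, is exactly the case the cache poisoning attack relies on, so a low port count or a tiny standard deviation is the thing to watch for.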

Update: IBM’s Internet Security Systems blog has a good summary post called ‘Responding to the DNS vulnerability and attacks‘. Update #2: Dan Kaminsky talked about the DNS vulnerability more in a Black Hat Webcast. Related Link: Is Your DNS Server Vulnerable To Cache Poisoning? | ||

| The ARP Lowdown [CTO Chronicles] Posted: 24 Jul 2008 05:09 PM CDT Likely the single most controversial part of the Mirage NAC product involves its use of ARP. Stiennon referred to "ARP twiddling" as our means of quarantine during our recent, er, discussion on the usefulness of NAC. As tempting as it was to respond to the comment in the thread, I thought perhaps it was better to leave it for its own post. So, what's up with our use of ARP? Why do we do it this way and why haven't we moved more quickly to one of the more common means of quarantining endpoints? Here's the lowdown that before has been kept more on the down-low.

More than just quarantine.
One of the first discussions we (at least I) end up having with prospects surrounds the notion of device state. The knowledge of network entry and exit forms the bookends of the NAC process. Any NAC solution must have this basic notion in order to provide the necessary governance. To be sure, many options exist. Some solutions take alerts from the switching infrastructure (SNMP traps, Syslog messages, whatever) based upon either the link state of the port or the population of the bridging tables. Others may have a RADIUS hook, on the assumption that any device that's connecting is authenticating in one way or another. Still others may have a DHCP hook and use address assignment as the state trigger. Finally, some of the inline solutions simply wait to see traffic flow through them. There are three basic reasons we like ARP for this: First, and most importantly, it's immediate since it's part of the initial stack initialization. Second, it's independent of the switching infrastructure, in that it works the same way regardless of downstream switches, whether the switches can send traps, etc. Third, it's independent of endpoint characteristics, such as OS type and whether the address is statically assigned or dynamically assigned.

But also quarantine
Yes, we use ARP for quarantine. 
The marketing side of the house prefers "ARP Management" to "ARP Poisoning" or "ARP Twiddling." I don't especially care. The best way to enumerate why we do it this way is to back up and review, at a slightly higher level, what we think quarantining should be and mean:

Quarantining should be fast
This almost seems like it could go without saying. Whether effected for the purposes of device-specific remediation or more generalized network protection, time is both money and risk. A quarantining method that takes, for example, seconds to put in place is, at least to us, a non-starter.

Quarantining should be holistic
One of the fundamental disagreements I have with Stiennon's notion of network security (rant alert) is that packet (as opposed to endpoint connection) dropping is sufficient as a mitigation method. With a threat blended into, say, a bot (with an https control channel), a file-sharing based worm, a keystroke logger that may be logging data but not sending it, and a spam relay, the very notion of separating "good" traffic from "bad" traffic is silly. Take even the highly outdated (and quaint, by today's standards) threats like Blaster and Welchia. The "bad traffic" in those cases was Windows networking traffic, whose primary usage was *inside* the infrastructure. So then a "good traffic/bad traffic" policy removes Windows networking functions from the connection, which removes access to both file shares and (assuming Exchange) corporate email. Now then, how useful, really, is that endpoint's connection? Well, they can get their stock quotes. And they can get to internal portal-based applications. Boy, there's a great idea. "I see, Mr./Ms. User, that you're infected with malware; welcome to my Oracle Financials application." How does that idea pass muster with anyone? 

Quarantining should be full-cycle
This may or may not be a point of debate, but we continue to believe that a quarantine mechanism that is only available at admission time is wholly inadequate. This is the "main" thing keeping us from leveraging 802.1x as a quarantine mechanism (the lack of this capability in 802.1x environments has been a rant of mine before, since the RFC that would allow for this is 5 years old). As much as I like the idea of enforcement at the point of access, I simply do not see how it's workable unless and until it's possible to revoke previously granted access based on policy.

Quarantining should be transparent
I put this last since I think it's the one with the largest amount of wiggle room. The thing I always liked the least about SNMP and CLI based VLAN moving is that there remains the need to get the endpoint to go request a new address as a result of the VLAN change. Similarly, the best that DHCP can offer is to play around with the timing elements, but that's not the same as the ability to do quarantine on-demand per policy. Not to beat a dead horse or anything, but RFC 3576 would get us there if the switch vendors would just implement it. Did I mention that the RFC is 5 years old?

So, there you have it. We use ARP for state because it's fast, robust and hard to bypass. We use ARP for quarantine because, quite frankly, we've yet to see any other quarantine mechanism that can fit the bill.

PS I almost forgot. The main knock against our "ARP twiddling" approach, beyond just the philosophical objection, is that it's easy to bypass. Is it? Some methods may be. Ours is not. Not from our own internal testing, and not from installations spanning 550 customers across 38 countries. And, yes, we thought of the static cache-entry trick already. | ||
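
To make the "ARP as a state trigger" argument concrete, here is a minimal sketch of noticing network entry from ARP traffic alone. It is not the Mirage implementation, which the post does not describe. It simply unpacks the standard 28-byte Ethernet/IPv4 ARP payload and reports each sender MAC the first time it appears. The names `parse_arp` and `note_arrivals` and the sample addresses are hypothetical, and capturing frames off the wire (which needs a raw socket and privileges) is left out.

```python
import struct

# 28-byte ARP payload for Ethernet/IPv4: hardware type, protocol type,
# hardware length, protocol length, operation, then sender and target
# hardware/protocol addresses.
ARP_FORMAT = "!HHBBH6s4s6s4s"


def parse_arp(payload):
    """Unpack an Ethernet/IPv4 ARP payload into a dict of its fields."""
    htype, ptype, hlen, plen, oper, sha, spa, tha, tpa = struct.unpack(
        ARP_FORMAT, payload[:28]
    )
    return {
        "oper": oper,  # 1 = request, 2 = reply
        "sender_mac": ":".join(f"{b:02x}" for b in sha),
        "sender_ip": ".".join(str(b) for b in spa),
        "target_ip": ".".join(str(b) for b in tpa),
    }


def note_arrivals(payloads, known_macs):
    """Yield (mac, ip) the first time each sender MAC is seen."""
    for payload in payloads:
        arp = parse_arp(payload)
        mac = arp["sender_mac"]
        if mac not in known_macs:
            known_macs.add(mac)
            yield mac, arp["sender_ip"]


# Hypothetical gratuitous ARP from a host that just brought its stack up:
# sender and target protocol address are the same.
sample = struct.pack(
    ARP_FORMAT, 1, 0x0800, 6, 4, 1,
    bytes.fromhex("020000000001"), bytes([192, 0, 2, 10]),
    bytes(6), bytes([192, 0, 2, 10]),
)
arrivals = list(note_arrivals([sample, sample], set()))
print(arrivals)
```

The point of the sketch is the one the post makes: the signal comes from the endpoint's own stack initialization, so it does not depend on switch traps, RADIUS, or DHCP, and it works the same for statically and dynamically addressed hosts.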

| Going to defcon after all [Kees Leune] Posted: 24 Jul 2008 02:33 PM CDT It turns out I'm going to DefCon after all. It will be my first DefCon and my first trip to Las Vegas. As a matter of fact, it will be my first trip to Pacific Time ;-) I'll get in Thursday evening late (midnight) and I will leave again Sunday evening (9pm-ish). I am also looking forward to meeting some of the people I've been talking to online for a long time now! T minus two weeks and counting. | ||

| Happy holidays... [varie // eventuali // sicurezza informatica] Posted: 24 Jul 2008 12:03 PM CDT I will be away for a while for a well-deserved summer rest. I won't say the blog will be "closed for vacation", but I will certainly show more signs of life on Twitter than here. Greetings to readers, colleagues, friends, blogger friends, friends who read only because it's my blog and have no interest in security, anonymous commenters and those with a name. As usual, I don't set an out-of-office auto-reply on my email, so greetings also to those who are reading this because I haven't replied to some email and are wondering whether I'm alive. | ||

| Extended Laundry List - July 24, 2008 [Security Incite Rants] Posted: 24 Jul 2008 07:36 AM CDT Good Morning:  The Extended Laundry List

Photo credit: "The Addam's Family Laundry" originally uploaded by DanielaNob | ||

| Assessing your Organization’s Network Perimeter (pt. 3) [BlogInfoSec.com] Posted: 24 Jul 2008 06:00 AM CDT Welcome once again to the risk rack. This time on the risk rack we will be continuing our review of how to assess your organization's network perimeter. As a reminder the identified steps were:

In Part I we reviewed tips and tricks for step 1 "Define the functions and purposes of your network perimeter" and started a spreadsheet. In Part II we looked at tips and tricks for Step 2: "Assess the technology used along the perimeter of your network." In Part III we will be looking at tips and tricks for step 3 "Assess the Processes used to support your network perimeter." Processes are an important element of any program and provide the overall framework and ongoing guidance to ensure the program operates as prescribed, and effectively. Formal processes to specifically support the network perimeter are often integrated with other processes and almost never centralized to ensure that there is a cohesive flow. Since the network perimeter is often the most vulnerable point of an organization's operating environment it is important that the processes that support its operation and security are developed with a focus to ensure that they are comprehensive and performed effectively. When doing a review of processes it is important to speak to the key personnel that either own or support the network perimeter and ask them what they do to support the network perimeter. During the conversations you should ask them (...) © Frank Cassano for BlogInfoSec.com, 2008. This feed is copyrighted by bloginfosec.com. The feed may be syndicated only with our permission. If you feel that this feed is being syndicated by a website other than through us or one of our partners, please contact bloginfosec.com immediately at copyright_at_bloginfosec.com. Thank you! | ||

| these tubes are quick [extern blog SensePost;] Posted: 24 Jul 2008 04:57 AM CDT Kaminsky's thunder has all but evaporated into a fine mist, and Ptacek has gone all silent. In the meantime, the Metasploit crowd put their heads down and produced: http://www.caughq.org/exploits/CAU-EX-2008-0003.txt DNS poisoning for the masses. (If anything ever deserved the tag 'infosec-soapies', this would be it!!!) | ||

| Metasploit Bailiwick DNS Exploit Adds Domains [RioSec] Posted: 24 Jul 2008 01:13 AM CDT Overnight the Metasploit DNS exploit module continued to evolve, to more devastating effect. Perhaps most importantly, a new module named Auxiliary::Spoof::Dns::BailiWickedDomain was introduced based on feedback from Cedric Blancher. It replaces the nameservers for a domain, allowing an attacker to redirect all traffic for the entire domain through them. Showcasing the ease of use of the Metasploit Framework, this entire exploit is written in 330 lines, including comments! | ||

| When your flight is DOA [StillSecure, After All These Years] Posted: 24 Jul 2008 12:04 AM CDT Last night I wrote about the first day of this week's road trip and my hotel, which doubled as a funeral parlor. Now it is Wed night and I am live blogging from the runway of DC Reagan National airport, on board a Delta flight which has been on this same runway and not moved for the past 2 and a half hours. I say I am live blogging this, but of course you are not live reading this. That is because I have no way to upload this to my server. You see, the iPhone 3G, for all the coolness, has no Internet sharing that I am aware of. My old Windows Mobile phone had Internet sharing and if I still had that you would be reading this live right now. But no, not with the iPhone. I was scheduled to connect in Cincinnati right about now. I am obviously missing that connection. I was flying from there to Columbus and driving about an hour and a half from Columbus. I have a 9am meeting tomorrow. So unless I feel like renting a car and driving 4 hours whenever it is I land, I am pretty much missing my meeting tomorrow as well. What to do? a). Should I break out of this plane, run to the terminal and try to get on a flight home to Florida b). Go postal or c). Grin and bear it and try to remember that I love what I do and that is what flying in the summer is all about (actually that summer thing is full of beans, it is no better in winter with weather either!). So here is the update: we sat on the runway for 4 hours! Finally took off and landed in Cincinnati at midnight. I had no connection. Could not get a flight out in the morning, nor rent a car, and most hotels were sold out. I am writing this from the coffee shop of the lovely (and I do mean lovely) Drawbridge Inn. I will miss my meeting in the morning and am booked on a flight home tomorrow. Ah, the life of a road warrior! | ||

| Posted: 23 Jul 2008 11:57 PM CDT I have written before about what a joke I think it is when people write that Microsoft's best days are behind it and that their corporate grave is already being dug. Google is going to usher in a new age of net centric computing and topple the once and future king. Yeah sure. Don Dodge had a good article up the other day about Microsoft's recent end of FY numbers. The Redmond rockets racked up over 60 billion (yeah with a b) in revenue last year, an 18% increase over the year before! They dropped 17.6 billion (again with a b) to the bottom line. To give it some perspective, Yahoo all told only does about 7 or 8 billion in gross revenue a year. Microsoft grew 9 billion in revenue last year. That is, they grew organically by more than a whole Yahoo. You can check out Don's article for more financial facts and figures. I ask you ladies and gentlemen, does this sound like the numbers of a company on the way down? If you were a betting person, would you be betting against this monster? I would not be. Do you think by 2011 things are going to fundamentally change? Next time someone tells you how open source, Linux, Google or anyone else is going to kill Microsoft, try to put some of these numbers in perspective. |

